
There’s an online forum I hope you’ve never heard of. It’s a place I hope your loved ones don’t know about either. It has tens of thousands of members and millions of posts. It’s a forum where anyone, including children, can go to discuss one topic – suicide.

But it’s not only a talking shop. There are detailed instructions on methods, a thread where you can live-post your own death and even a “hook-up” section where members can meet others to die with. It encourages, promotes and normalises suicide. The majority of users are young, sad and vulnerable.

It’s a site that gives a disturbing insight into the problems of online regulation and the state of the Online Safety Act (OSA), which has just turned one after being given Royal Assent on 26 October 2023. Its purpose was to make the UK the safest place in the world to go online.

Ofcom, the online and broadcasting regulator, recognised the danger the forum poses. Following one of our reports, in November last year it wrote to the site to say that under the OSA (when fully operational) the site would be breaking UK law by encouraging suicide and self-harm. It advised the forum administrators to take action or face further consequences in the future.

The forum is thought to be based in the US, but the administrators and server locations remain unknown.

By way of response, administrators posted a message on the forum saying it had blocked UK users. That “block” lasted just two days.

The forum is still live now, and still accessible to young people in the UK. Indeed, my research shows at least five British children have died after contact with the site.

Demanding accountability

Ofcom concedes in private that smaller websites, based abroad with anonymous users, may be beyond its reach, even when the OSA is in full force.

But big tech is likely to find it harder to simply ignore the new legal burdens the OSA will place on platforms available in the UK. However, and this is key, before the regulator Ofcom can enforce any of these duties, it’s got to consult publicly on codes of practice and guidance. We are still in the consultation phase – and its real power now is the threat to tech firms of what might be to come.

But while the law has yet to come into operation, it may have already started to provoke real change. This huge bit of legislation is meant to tackle everything from access to pornography and terrorism content to fake news and children’s safety.

The OSA has been years in the making. In fact, you have to go back five prime ministers and at least six digital ministers to its origins. Its roots lie in a time when the public and law makers started to realise that big tech’s social media platforms held huge sway, but were not held to account.

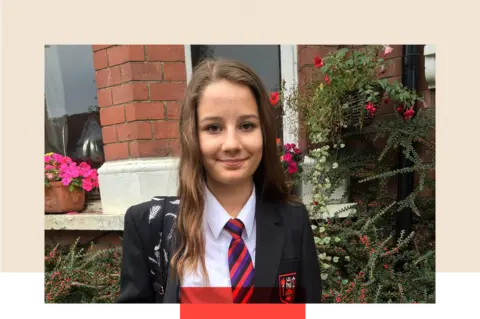

And then there was the death of the schoolgirl Molly Russell which, more than any other single story, galvanised Parliament into action. Molly’s story struck a chord. She could have been anyone’s daughter, sister, niece or friend.

Molly was just 14 when she ended her own life in November 2017. After her death, her father Ian Russell found she had been bombarded with dark, depressing content on Instagram and to a lesser extent on Pinterest. The coroner at her inquest decided that social media had “more than minimally” played a part in her death.

She had been fed graphic, distressing content – during the inquest when it was played in open court, some people left the room.

Her family decided something had to change and Mr Russell began a campaign for a law to rein in the power of Silicon Valley.

Ian took his campaign to Parliament and Silicon Valley itself. He spoke to tech insiders, as well as Sir Tim Berners-Lee, the inventor of the World Wide Web, and he even pressed his case with the then Duke and Duchess of Cambridge.

In October last year he got his wish. As he told me recently, it was a bittersweet moment.

“Seven years after Molly’s death, I remain convinced that effective regulation is the best way to protect children from the harm caused by social media, and that the Online Safety Act is the only way to prevent more families experiencing unimaginable grief,” he said.

The OSA claims to be the most far-reaching law of its kind in any country. That’s backed by the possibility of multi-million-pound fines against platforms and even criminal sanctions against tech bosses themselves if they repeatedly refuse to comply.

It sounds tough. But the truth is many campaigners say it isn’t tough enough, or fast enough. The law will actually be introduced in three phases, but only after months of Ofcom talking to government, campaigners and big tech leaders.

All these rules are about the behaviour of platforms, but it’s worth saying that when it comes to individuals and how they act online, talk has already turned to action. New criminal offences around cyber-flashing, spreading fake news and encouraging self-harm went live in January this year.

But even though the majority of the provisions of the Act are not yet enforceable, Silicon Valley does seem to be taking notice.

Silicon Valley shake-up

On 17 September, a press release popped into my inbox. It was from Meta, which owns Instagram, WhatsApp, Facebook and Messenger. Nothing to get excited about, happens all the time. Only this one was different. It was effectively announcing the biggest shake-up in Instagram’s short history, the creation of specific “teen accounts”.

In short, it means all existing accounts belonging to under 18s would be moved to new accounts with built-in restrictions, including greater parental controls for children under 16. Any child signing up from that week would automatically get one of the new “safer” accounts.

The reality of the new teen accounts may not quite match up to the hyperbole of the press release, but Meta didn’t have to make this change. Was it the spectre of the OSA that forced their hand? Yes, but only in part.

It would be wrong to think that all the positive changes protecting children online have come about because of the prospect of the OSA. The UK is only one player in a global move to restrict the power of big tech. In February this year the EU’s Digital Services Act fully came into force, embedding a duty of transparency on big firms and holding them to account for illegal or harmful content.

In the US, federal legislation seems to have stalled, but lawsuits are coming there that target the biggest social media platforms. Families of children harmed by their exposure to harmful content, school boards and the Attorneys General of 42 states are suing the platforms. The suits are being brought under consumer protection laws. In these cases, the claim is that social media was designed to be addictive and does not have adequate protections for children. They are seeking billions of dollars in pay-outs.

The influence of Molly Russell’s story here is also significant. I have met some of those bringing these lawsuits and their lawyers. They all know her name. As do senior people in Silicon Valley. Long before the OSA became law, companies were starting to introduce better content moderation.

That said, Ian Russell believes much, much more needs to be done.

“While firms continue to move fast and break things, timid regulation could cost lives… We have to find a way to move faster and to be bolder.”

He has a point. End-to-end encryption means law enforcement feels it is in effect blinded when it comes to child exploitation material. If they can’t see the material, they can’t identify suspects and victims. The spread of disinformation remains unchecked on some platforms. Age verification is not yet robust. And a key emerging issue is the misuse of AI, for example in sextortion scams targeting young people.

The Act is meant to be “technology neutral” and to regulate the harmful effects of any new tech. But it’s not yet clear how it deals with new AI products.

Dame Melanie Dawes, Ofcom’s Chief Executive, rejects much of the criticism.

“From December, tech firms will be legally required to start taking action, meaning 2025 will be a pivotal year in creating a safer life online.”

“Our expectations are going to be high, and we’ll be coming down hard on those who fall short.”

So, are children becoming safer online thanks to the OSA?

The reality is that the big tech platforms are transnational and only a global approach can force meaningful change. The OSA is just one part of a global jigsaw puzzle of laws and legal action.

But pieces of that puzzle are still missing and it’s in those jagged gaps where the threat to children still lies.

Lead image: Getty

